TextDecoder and TextEncoder

What if the binary data is actually a string? For instance, we received a file with textual data.

The built-in TextDecoder object lets us decode data from a binary buffer into a regular string.

To do that, we first need to create a decoder:

let decoder = new TextDecoder([label], [options]);

- label – the encoding; utf-8 is used by default, but big5, windows-1251 and many others are also supported.

- options – an object with additional settings:

- fatal – boolean; if true, an exception (a TypeError) is thrown for invalid (undecodable) byte sequences; otherwise (the default) they are replaced with the character \uFFFD.

- ignoreBOM – boolean; if true, a leading BOM (byte order mark, an optional marker that indicates byte order) is not stripped but kept in the output. This is rarely needed.
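To illustrate fatal, here is a minimal sketch that decodes a deliberately malformed byte sequence with and without the option. It uses console.log instead of alert so it also runs in Node.js. decodeStrict is a hypothetical helper name.

```javascript
// 0xC3 starts a 2-byte UTF-8 sequence, but 0x28 ("(") is not a valid continuation byte.
const invalid = new Uint8Array([0x61, 0xC3, 0x28]);

// Default (fatal: false): the broken sequence is replaced with U+FFFD.
const lenient = new TextDecoder('utf-8').decode(invalid);
console.log(lenient === 'a\uFFFD('); // true

// Hypothetical helper: returns null instead of a string when the input is malformed.
function decodeStrict(bytes) {
  try {
    return new TextDecoder('utf-8', { fatal: true }).decode(bytes);
  } catch (err) {
    if (err instanceof TypeError) return null; // fatal: true reports errors as TypeError
    throw err;
  }
}

console.log(decodeStrict(invalid)); // null
console.log(decodeStrict(new Uint8Array([72, 105]))); // Hi
```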

…and then call its decode method:

let str = decoder.decode([input], [options]);

- input – the binary buffer (BufferSource) to decode.

- options – an object with additional settings:

- stream – true when decoding a stream, where decode is called repeatedly with each incoming chunk of data. In that case a multi-byte character may occasionally be split between chunks. This option tells TextDecoder to remember the incomplete character and decode it together with the next chunk.
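Here is a minimal sketch of stream in action. It assumes the data arrives as two hypothetical chunks, with the two UTF-8 bytes of é (0xC3 0xA9) split between them. The final decode() call with no arguments flushes anything the decoder is still holding.

```javascript
// "café!" in UTF-8, with the two bytes of "é" (0xC3 0xA9) split across the chunks.
const chunks = [
  new Uint8Array([0x63, 0x61, 0x66, 0xC3]),
  new Uint8Array([0xA9, 0x21]),
];

// Without stream: each chunk is decoded on its own, so both halves of "é" become U+FFFD.
const naive = chunks.map((chunk) => new TextDecoder().decode(chunk)).join('');
console.log(naive === 'caf\uFFFD\uFFFD!'); // true

// With stream: true the decoder keeps the incomplete bytes until the next call.
const decoder = new TextDecoder();
let streamed = '';
for (const chunk of chunks) {
  streamed += decoder.decode(chunk, { stream: true });
}
streamed += decoder.decode(); // flush: end of stream

console.log(streamed); // café!
```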

For instance:

let uint8Array = new Uint8Array([72, 101, 108, 108, 111]);
alert( new TextDecoder().decode(uint8Array) ); // Hello

let chineseBytes = new Uint8Array([228, 189, 160, 229, 165, 189]);
alert( new TextDecoder().decode(chineseBytes) ); // 你好

We can decode part of a binary array by creating a subarray view of it:

let uint8Array = new Uint8Array([0, 72, 101, 108, 108, 111, 0]);

// Take the string from the middle of the array.
// Note that subarray only creates a new view; the underlying data is not copied.
// Changes to the subarray's contents affect the original array and vice versa.
let binaryString = uint8Array.subarray(1, -1);

alert( new TextDecoder().decode(binaryString) ); // Hello

TextEncoder

TextEncoder does the reverse: it encodes a string into a binary array.

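Its syntax is:

let encoder = new TextEncoder();

The only encoding it supports is utf-8. It has two methods:

- encode(str) – returns a Uint8Array with the encoded string.

- encodeInto(str, destination) – encodes str into destination, which must be a Uint8Array.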
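Here is a short sketch of TextEncoder, runnable in Node.js or a browser console. The constructor takes no encoding argument and always produces UTF-8. encodeInto returns an object with read (UTF-16 code units consumed) and written (bytes written), which is useful when you reuse a preallocated buffer.

```javascript
const encoder = new TextEncoder();
console.log(encoder.encoding); // utf-8

// encode() allocates and returns a new Uint8Array.
const bytes = encoder.encode('Hello');
console.log(Array.from(bytes).join(',')); // 72,101,108,108,111

// encodeInto() writes into an existing buffer and reports how much it used.
const target = new Uint8Array(8);
const { read, written } = encoder.encodeInto('你好', target);
console.log(read, written); // 2 6

// Round trip: decode only the bytes that were actually written.
console.log(new TextDecoder().decode(target.subarray(0, written))); // 你好
```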

Unicode in JavaScript

Unless told otherwise, the browser assumes that a script's source code is written in the local charset, which varies by country and can cause unexpected issues. For this reason, it’s important to set the charset of every JavaScript document.

How do you specify another encoding, in particular UTF-8, the most common file encoding on the web?

If the file contains a BOM character, it takes priority in determining the encoding. Opinions online differ: some say a BOM in UTF-8 is discouraged, and some editors won’t even add one.
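In UTF-8 the BOM (U+FEFF) is the three bytes EF BB BF. The sketch below checks for those bytes with a hypothetical helper, hasUtf8Bom. It also shows how the built-in TextDecoder treats the BOM: by default it is removed, and with ignoreBOM: true it is kept as U+FEFF.

```javascript
// Hypothetical helper: true if the bytes start with the UTF-8 BOM (EF BB BF).
function hasUtf8Bom(bytes) {
  return bytes.length >= 3 && bytes[0] === 0xEF && bytes[1] === 0xBB && bytes[2] === 0xBF;
}

const withBom = new Uint8Array([0xEF, 0xBB, 0xBF, 0x68, 0x69]); // BOM + "hi"

console.log(hasUtf8Bom(withBom)); // true

// By default TextDecoder strips a leading BOM...
console.log(new TextDecoder().decode(withBom)); // hi

// ...while ignoreBOM: true keeps it, so the result is "\uFEFFhi" (3 code units).
console.log(new TextDecoder('utf-8', { ignoreBOM: true }).decode(withBom).length); // 3
```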

This is what the Unicode standard says:

… Use of a BOM is neither required nor recommended for UTF-8, but may be encountered in contexts where UTF-8 data is converted from other encoding forms that use a BOM or where the BOM is used as a UTF-8 signature.

This is what the W3C says:

In HTML5 browsers are required to recognize the UTF-8 BOM and use it to detect the encoding of the page, and recent versions of major browsers handle the BOM as expected when used for UTF-8 encoded pages. — https://www.w3.org/International/questions/qa-byte-order-mark

If the file is fetched using HTTP (or HTTPS), the Content-Type header can specify the encoding:

Content-Type: application/javascript; charset=utf-8

If this is not set, the fallback is to check the charset attribute of the script tag:

<script src="./app.js" charset="utf-8"></script>

If this is not set, the document charset meta tag is used:

...
<head>
  <meta charset="utf-8" />
</head>
...

The charset attribute in both cases is case-insensitive (see the spec).

Public libraries should generally avoid non-ASCII characters in their source code, so that users who load it with an encoding different from the original one don’t run into issues.

How JavaScript uses Unicode internally

While a JavaScript source file can have any kind of encoding, JavaScript will then convert it internally to UTF-16 before executing it.

JavaScript strings are all UTF-16 sequences, as the ECMAScript standard says:

When a String contains actual textual data, each element is considered to be a single UTF-16 code unit.
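Each element of a string is one 16-bit code unit, which charCodeAt exposes. Here is a small sketch with a hypothetical helper, codeUnits, that lists those units in hex.

```javascript
// Hypothetical helper: the UTF-16 code units of a string, as 4-digit hex.
function codeUnits(str) {
  const units = [];
  for (let i = 0; i < str.length; i++) {
    units.push(str.charCodeAt(i).toString(16).toUpperCase().padStart(4, '0'));
  }
  return units;
}

console.log(codeUnits('h\u00E9').join(' ')); // 0068 00E9
console.log(codeUnits('🐶').join(' '));      // D83D DC36
```

The é fits in a single code unit, while 🐶 needs two.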

Using Unicode in a string

A unicode sequence can be added inside any string using the format \uXXXX :

A sequence can be created by combining two unicode sequences:

Notice that while both generate an accented e, they are two different strings, and s2 is considered to be 2 characters long:
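Here is a minimal sketch of that comparison. It assumes s1 is the precomposed '\u00E9' and s2 is 'e' followed by the combining acute accent '\u0301', consistent with the s1 and s3 examples in this section.

```javascript
const s1 = '\u00E9';       // é as one precomposed code point (U+00E9)
const s2 = '\u0065\u0301'; // e (U+0065) + COMBINING ACUTE ACCENT (U+0301)

console.log(s1 === s2);    // false: they render alike but differ
console.log(s1.length);    // 1
console.log(s2.length);    // 2
```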

In a text editor, moving across that character may also take two presses of the arrow key, because the first press only covers half of it.

You can also write the same string by combining a plain character with a Unicode escape, since internally it’s the same thing:

const s3 = 'e\u0301' //é
s3.length === 2 //true
s2 === s3 //true
s1 !== s3 //true

Normalization

Unicode normalization is the process of removing ambiguities in how a character can be represented, to aid in comparing strings, for example.
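normalize() accepts one of the forms 'NFC' (the default), 'NFD', 'NFKC' and 'NFKD'. Here is a short sketch of the two canonical forms applied to the accented e, in composed and decomposed spelling.

```javascript
const composed = '\u00E9';    // é, one code point
const decomposed = 'e\u0301'; // e + combining acute accent

// NFC composes base + mark into a precomposed character where one exists.
console.log(decomposed.normalize('NFC') === composed); // true

// NFD does the opposite and splits characters into base + combining marks.
console.log(composed.normalize('NFD') === decomposed); // true

// With no argument, normalize() uses NFC.
console.log(decomposed.normalize().length); // 1
```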

For example, these two strings look the same but are not equal:

const s1 = '\u00E9' //é
const s3 = 'e\u0301' //é
s1 !== s3 //true

ES6/ES2015 introduced the normalize() method on the String prototype, so we can do:

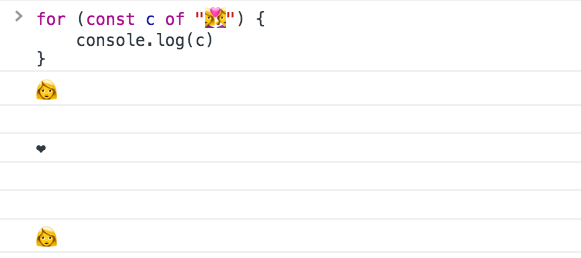

s1.normalize() === s3.normalize() //true

Emojis

Emojis are fun, and they are Unicode characters, so they are perfectly valid in strings:

Most emojis are in the astral planes, outside the first plane, the Basic Multilingual Plane (BMP). Code points outside the BMP cannot be represented in 16 bits, so JavaScript uses a combination of 2 code units to represent them.

The 🐶 symbol, which is U+1F436, is encoded in UTF-16 as \uD83D\uDC36 (a so-called surrogate pair). There is a formula to calculate this, but it’s a rather advanced topic.
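The formula itself is short. Subtract 0x10000 from the code point, which leaves a 20-bit number. Add its top 10 bits to 0xD800 to get the high surrogate, and add its bottom 10 bits to 0xDC00 to get the low surrogate. Here is a sketch with a hypothetical helper name.

```javascript
// Hypothetical helper: split an astral code point into a UTF-16 surrogate pair.
function toSurrogatePair(codePoint) {
  if (codePoint < 0x10000 || codePoint > 0x10FFFF) {
    throw new RangeError('Not an astral code point');
  }
  const offset = codePoint - 0x10000;    // 20 bits remain
  const high = 0xD800 + (offset >> 10);  // top 10 bits
  const low = 0xDC00 + (offset & 0x3FF); // bottom 10 bits
  return [high, low];
}

const [high, low] = toSurrogatePair(0x1F436);
console.log(high.toString(16), low.toString(16));    // d83d dc36
console.log(String.fromCharCode(high, low) === '🐶'); // true
```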

Some emojis are also created by combining together other emojis. You can find those by looking at this list https://unicode.org/emoji/charts/full-emoji-list.html and notice the ones that have more than one item in the unicode symbol column.

👩❤️👩 is created by combining 👩 (\uD83D\uDC69), a zero-width joiner (\u200D), ❤️ (\u2764\uFE0F), another zero-width joiner (\u200D) and another 👩 (\uD83D\uDC69) in a single string: \uD83D\uDC69\u200D\u2764\uFE0F\u200D\uD83D\uDC69

Neither the length property nor counting code points will report this emoji as 1 character.

Get the proper length of a string

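Checking the length of this string gives 8, because length counts UTF-16 code units, not code points or visible characters: 2 for each 👩, and 1 each for the two \u200D, \u2764 and \uFE0F.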

Also, iterating over it is kind of funny:
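Here is a sketch of what that looks like, with the emoji written as escapes so the invisible joiners are explicit. Indexing with [i] walks UTF-16 code units, while for...of walks code points.

```javascript
// 👩 + ZWJ + ❤ + VARIATION SELECTOR-16 + ZWJ + 👩
const couple = '\uD83D\uDC69\u200D\u2764\uFE0F\u200D\uD83D\uDC69';

console.log(couple.length); // 8 (UTF-16 code units)

// Indexing: each 👩 shows up as two lone surrogate halves.
const byIndex = [];
for (let i = 0; i < couple.length; i++) byIndex.push(couple[i]);
console.log(byIndex.length); // 8

// for...of: each 👩 stays whole, but the joiners and selector are still separate items.
const byCodePoint = [];
for (const ch of couple) byCodePoint.push(ch.codePointAt(0).toString(16));
console.log(byCodePoint.join(' ')); // 1f469 200d 2764 fe0f 200d 1f469
```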

Curiously, if you paste this emoji into a password field, it is counted as 8 characters, which might make it a valid password on some systems.

How to get the “real” length of a string containing unicode characters?

One easy way in ES6+ is to use the spread operator:
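Spreading a string uses the string iterator, which yields one element per code point, so a surrogate pair counts once. Here is a short sketch. Array.from uses the same iterator.

```javascript
const dog = '🐶';

console.log(dog.length);             // 2 (code units)
console.log([...dog].length);        // 1 (code points, via the string iterator)
console.log(Array.from(dog).length); // 1 (same iterator, without spread syntax)
```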

You can also use the Punycode library by Mathias Bynens:

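require('punycode').ucs2.decode('🐶').length //1

(Punycode is also great for converting Unicode to ASCII.) Note that the punycode module bundled with Node.js is deprecated; the same library is published on npm as the punycode package.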

Note that emojis that are built by combining other emojis will still give a bad count:

require('punycode').ucs2.decode('👩❤️👩').length //6
[...'👩❤️👩'].length //6

If the string contains combining marks, however, even this won’t give the right count. Check this Glitch https://glitch.com/edit/#!/node-unicode-ignore-marks-in-length as an example.

(you can generate your own weird text with marks here: https://lingojam.com/WeirdTextGenerator)
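This goes beyond what the original text covers. Newer runtimes (current browsers, Node.js 16+) provide Intl.Segmenter, which splits text into user-perceived characters (extended grapheme clusters). The sketch below assumes such a runtime. graphemeCount is a hypothetical helper, and exact segmentation can depend on the Unicode version bundled with the engine.

```javascript
// Hypothetical helper: count user-perceived characters with Intl.Segmenter.
function graphemeCount(str) {
  const segmenter = new Intl.Segmenter(undefined, { granularity: 'grapheme' });
  return [...segmenter.segment(str)].length;
}

const couple = '\uD83D\uDC69\u200D\u2764\uFE0F\u200D\uD83D\uDC69';
console.log(couple.length, [...couple].length, graphemeCount(couple)); // 8 6 1

// Combining marks are grouped with their base character too.
console.log(graphemeCount('e\u0301')); // 1
```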

Length is not the only thing to pay attention to: reversing a string is also error-prone if not handled correctly.
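Here is a sketch of the pitfall. split('') cuts a string into code units, so reversing it also swaps the two halves of a surrogate pair. Spreading into code points avoids that, but it still moves combining marks onto the wrong base character.

```javascript
const text = 'a🐶b';

// Naive: the surrogate pair is reversed too, leaving an invalid string.
const naive = text.split('').reverse().join('');
console.log(naive === 'b\uDC36\uD83Da'); // true

// Code-point aware: 🐶 stays intact.
const byCodePoint = [...text].reverse().join('');
console.log(byCodePoint); // b🐶a

// Combining marks still break: the accent moves from "e" onto "x".
console.log([...'e\u0301x'].reverse().join('') === 'x\u0301e'); // true
```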

ES6 Unicode code point escapes

ES6/ES2015 introduced a way to represent Unicode code points in the astral planes (any code point that needs more than 4 hexadecimal digits) by wrapping the code in curly braces:

The dog 🐶 symbol, which is U+1F436, can be represented as \u{1F436} instead of having to combine two surrogate code units, like we showed before: \uD83D\uDC36.

But length calculation still does not work correctly, because internally it’s converted to the surrogate pair shown above.
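Here is a short sketch confirming that the two spellings produce the same string. It also shows codePointAt and String.fromCodePoint (also ES2015), which work with the full code point.

```javascript
const escaped = '\u{1F436}';  // ES2015 code point escape
const pair = '\uD83D\uDC36';  // the same character as a surrogate pair

console.log(escaped === pair);                         // true
console.log(escaped.length);                           // 2, still two code units
console.log(escaped.codePointAt(0).toString(16));      // 1f436
console.log(String.fromCodePoint(0x1F436) === escaped); // true
```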

Encoding ASCII chars

The first 256 characters (U+0000 to U+00FF) can also be written with the special escape sequence \x, which takes exactly 2 hexadecimal digits:

This only works from \x00 to \xFF. The first 128 of these, \x00 to \x7F, are the ASCII characters; \x80 to \xFF cover the rest of the Latin-1 range.
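Here are a few quick checks of the \x escape.

```javascript
console.log('\x41' === 'A');      // true (ASCII range, \x00 to \x7F)
console.log('\xE9' === '\u00E9'); // true (Latin-1 range, \x80 to \xFF)
console.log('\xE9'.length);       // 1

// \x consumes exactly two hex digits, so the rest is ordinary text.
console.log('\x4142');            // A42
```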

UTF-8 code in JavaScript

utf8.js is a well-tested UTF-8 encoder/decoder written in JavaScript. Unlike many other JavaScript solutions, it is designed to be a proper UTF-8 encoder/decoder: it can encode/decode any scalar Unicode code point values, as per the Encoding Standard. Here’s an online demo.

Feel free to fork if you see possible improvements!

utf8.encode(string)

Encodes any given JavaScript string (string) as UTF-8, and returns the UTF-8-encoded version of the string. It throws an error if the input string contains a non-scalar value, i.e. a lone surrogate. (If you need to be able to encode non-scalar values as well, use WTF-8 instead.)

// U+00A9 COPYRIGHT SIGN; see http://codepoints.net/U+00A9
utf8.encode('\xA9');
// → '\xC2\xA9'

// U+10001 LINEAR B SYLLABLE B038 E; see http://codepoints.net/U+10001
utf8.encode('\uD800\uDC01');
// → '\xF0\x90\x80\x81'

utf8.decode(byteString)

Decodes any given UTF-8-encoded string (byteString) as UTF-8, and returns the UTF-8-decoded version of the string. It throws an error when malformed UTF-8 is detected. (If you need to be able to decode encoded non-scalar values as well, use WTF-8 instead.)

utf8.decode('\xC2\xA9');
// → '\xA9'

utf8.decode('\xF0\x90\x80\x81');
// → '\uD800\uDC01'
// → U+10001 LINEAR B SYLLABLE B038 E

utf8.version

A string representing the semantic version number.
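For comparison, the same "byte string" format (one character per byte, 0x00 to 0xFF) can be produced with the built-in TextEncoder and TextDecoder. This is not how utf8.js is implemented, and the helper names are hypothetical. One difference: TextEncoder silently replaces a lone surrogate with U+FFFD instead of throwing, so this sketch does not reproduce utf8.encode's error for non-scalar values.

```javascript
// Hypothetical helpers, not part of utf8.js.
// Encode a JS string as UTF-8 and return it as a byte string.
function toUtf8ByteString(str) {
  let out = '';
  for (const byte of new TextEncoder().encode(str)) {
    out += String.fromCharCode(byte);
  }
  return out;
}

// Decode a byte string; fatal: true makes malformed UTF-8 throw a TypeError.
// Assumes every character is in the range 0x00 to 0xFF.
function fromUtf8ByteString(byteString) {
  const bytes = Uint8Array.from(byteString, (ch) => ch.charCodeAt(0));
  return new TextDecoder('utf-8', { fatal: true }).decode(bytes);
}

console.log(toUtf8ByteString('\xA9') === '\xC2\xA9');                    // true
console.log(toUtf8ByteString('\uD800\uDC01') === '\xF0\x90\x80\x81');    // true
console.log(fromUtf8ByteString('\xF0\x90\x80\x81') === '\uD800\uDC01');  // true
```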

utf8.js has been tested in at least Chrome 27-39, Firefox 3-34, Safari 4-8, Opera 10-28, IE 6-11, Node.js v0.10.0, Narwhal 0.3.2, RingoJS 0.8-0.11, PhantomJS 1.9.0, and Rhino 1.7RC4.

Unit tests & code coverage

After cloning this repository, run npm install to install the dependencies needed for development and testing. You may want to install Istanbul globally using npm install istanbul -g .

Once that’s done, you can run the unit tests in Node using npm test or node tests/tests.js . To run the tests in Rhino, Ringo, Narwhal, PhantomJS, and web browsers as well, use grunt test .

To generate the code coverage report, use grunt cover .

Why is the first release named v2.0.0? Haven’t you heard of semantic versioning?

Long before utf8.js was created, the utf8 module on npm was registered and used by another (slightly buggy) library. @ryanmcgrath was kind enough to give me access to the utf8 package on npm when I told him about utf8.js. Since there had already been a v1.0.0 release of the old library, and to avoid breaking backwards compatibility with projects that rely on the utf8 npm package, I decided to tag the first release of utf8.js as v2.0.0 and take it from there.

utf8.js is available under the MIT license.