nltk.tokenize package¶

Tokenizers divide strings into lists of substrings. For example, tokenizers can be used to find the words and punctuation in a string:

>>> from nltk.tokenize import word_tokenize >>> s = '''Good muffins cost $3.88\nin New York. Please buy me . two of them.\n\nThanks.''' >>> word_tokenize(s) ['Good', 'muffins', 'cost', '$', '3.88', 'in', 'New', 'York', '.', 'Please', 'buy', 'me', 'two', 'of', 'them', '.', 'Thanks', '.']

This particular tokenizer requires the Punkt sentence tokenization models to be installed. NLTK also provides a simpler, regular-expression based tokenizer, which splits text on whitespace and punctuation:

>>> from nltk.tokenize import wordpunct_tokenize >>> wordpunct_tokenize(s) ['Good', 'muffins', 'cost', '$', '3', '.', '88', 'in', 'New', 'York', '.', 'Please', 'buy', 'me', 'two', 'of', 'them', '.', 'Thanks', '.']

We can also operate at the level of sentences, using the sentence tokenizer directly as follows:

>>> from nltk.tokenize import sent_tokenize, word_tokenize >>> sent_tokenize(s) ['Good muffins cost $3.88\nin New York.', 'Please buy me\ntwo of them.', 'Thanks.'] >>> [word_tokenize(t) for t in sent_tokenize(s)] [['Good', 'muffins', 'cost', '$', '3.88', 'in', 'New', 'York', '.'], ['Please', 'buy', 'me', 'two', 'of', 'them', '.'], ['Thanks', '.']]

Caution: when tokenizing a Unicode string, make sure you are not using an encoded version of the string (it may be necessary to decode it first, e.g. with s.decode(«utf8») .

NLTK tokenizers can produce token-spans, represented as tuples of integers having the same semantics as string slices, to support efficient comparison of tokenizers. (These methods are implemented as generators.)

>>> from nltk.tokenize import WhitespaceTokenizer >>> list(WhitespaceTokenizer().span_tokenize(s)) [(0, 4), (5, 12), (13, 17), (18, 23), (24, 26), (27, 30), (31, 36), (38, 44), (45, 48), (49, 51), (52, 55), (56, 58), (59, 64), (66, 73)]

There are numerous ways to tokenize text. If you need more control over tokenization, see the other methods provided in this package.

For further information, please see Chapter 3 of the NLTK book.

nltk.tokenize. sent_tokenize ( text , language = ‘english’ ) [source] ¶

Return a sentence-tokenized copy of text, using NLTK’s recommended sentence tokenizer (currently PunktSentenceTokenizer for the specified language).

- text – text to split into sentences

- language – the model name in the Punkt corpus

Return a tokenized copy of text, using NLTK’s recommended word tokenizer (currently an improved TreebankWordTokenizer along with PunktSentenceTokenizer for the specified language).

- text (str) – text to split into words

- language (str) – the model name in the Punkt corpus

- preserve_line (bool) – A flag to decide whether to sentence tokenize the text or not.

sent_tokenize

Natural Language Processing with Python NLTK is one of the leading platforms for working with human language data and Python, the module NLTK is used for natural language processing. NLTK is literally an acronym for Natural Language Toolkit.

In this article you will learn how to tokenize data (by words and sentences).

Install NLTK

Install NLTK with Python 3.x using:

Installation is not complete after these commands. Open python and type:

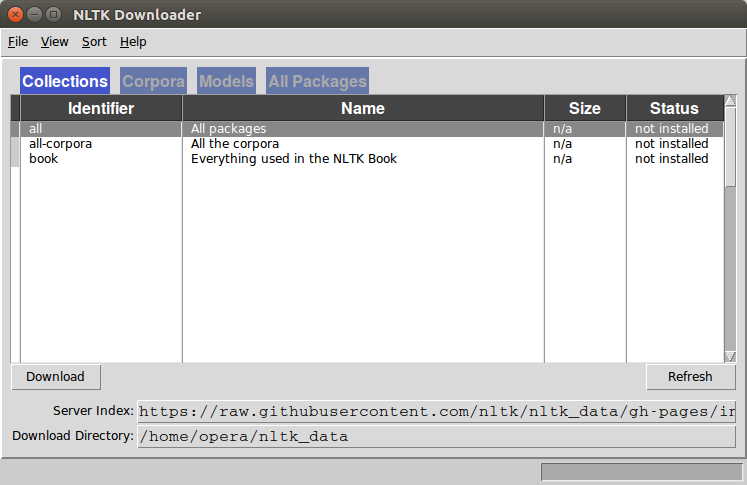

A graphical interface will be presented:

Click all and then click download. It will download all the required packages which may take a while, the bar on the bottom shows the progress.

Tokenize words

from nltk.tokenize import sent_tokenize, word_tokenize

data = «All work and no play makes jack a dull boy, all work and no play»

print(word_tokenize(data))

[‘All’, ‘work’, ‘and’, ‘no’, ‘play’, ‘makes’, ‘jack’, ‘dull’, ‘boy’, ‘,’, ‘all’, ‘work’, ‘and’, ‘no’, ‘play’]

All of them are words except the comma. Special characters are treated as separate tokens.

Tokenizing sentences

The same principle can be applied to sentences. Simply change the to sent_tokenize()

We have added two sentences to the variable data:

from nltk.tokenize import sent_tokenize, word_tokenize

data = «All work and no play makes jack dull boy. All work and no play makes jack a dull boy.»

print(sent_tokenize(data))

[‘All work and no play makes jack dull boy.’, ‘All work and no play makes jack a dull boy.’]

NLTK and arrays

from nltk.tokenize import sent_tokenize, word_tokenize

data = «All work and no play makes jack dull boy. All work and no play makes jack a dull boy.»

phrases = sent_tokenize(data)

words = word_tokenize(data)

print(phrases)

print(words)