1.4. Support Vector Machines¶

Support vector machines (SVMs) are a set of supervised learning methods used for classification , regression and outliers detection .

The advantages of support vector machines are:

- Effective in high dimensional spaces.

- Still effective in cases where number of dimensions is greater than the number of samples.

- Uses a subset of training points in the decision function (called support vectors), so it is also memory efficient.

- Versatile: different Kernel functions can be specified for the decision function. Common kernels are provided, but it is also possible to specify custom kernels.

The disadvantages of support vector machines include:

- If the number of features is much greater than the number of samples, avoid over-fitting in choosing Kernel functions and regularization term is crucial.

- SVMs do not directly provide probability estimates, these are calculated using an expensive five-fold cross-validation (see Scores and probabilities , below).

The support vector machines in scikit-learn support both dense ( numpy.ndarray and convertible to that by numpy.asarray ) and sparse (any scipy.sparse ) sample vectors as input. However, to use an SVM to make predictions for sparse data, it must have been fit on such data. For optimal performance, use C-ordered numpy.ndarray (dense) or scipy.sparse.csr_matrix (sparse) with dtype=float64 .

1.4.1. Classification¶

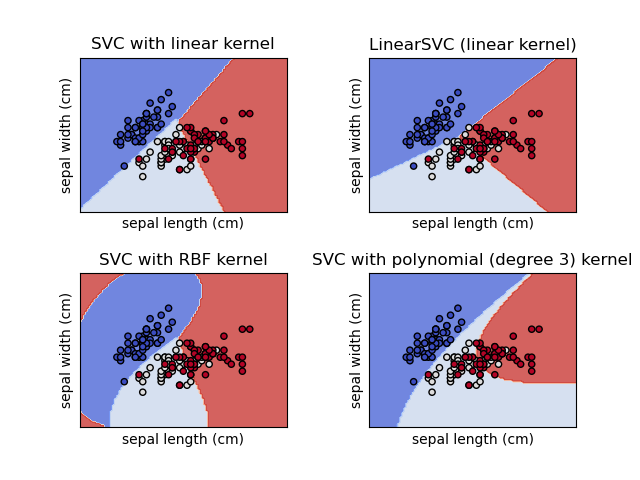

SVC , NuSVC and LinearSVC are classes capable of performing binary and multi-class classification on a dataset.

SVC and NuSVC are similar methods, but accept slightly different sets of parameters and have different mathematical formulations (see section Mathematical formulation ). On the other hand, LinearSVC is another (faster) implementation of Support Vector Classification for the case of a linear kernel. Note that LinearSVC does not accept parameter kernel , as this is assumed to be linear. It also lacks some of the attributes of SVC and NuSVC , like support_ .

As other classifiers, SVC , NuSVC and LinearSVC take as input two arrays: an array X of shape (n_samples, n_features) holding the training samples, and an array y of class labels (strings or integers), of shape (n_samples) :

>>> from sklearn import svm >>> X = [[0, 0], [1, 1]] >>> y = [0, 1] >>> clf = svm.SVC() >>> clf.fit(X, y) SVC()

After being fitted, the model can then be used to predict new values:

SVMs decision function (detailed in the Mathematical formulation ) depends on some subset of the training data, called the support vectors. Some properties of these support vectors can be found in attributes support_vectors_ , support_ and n_support_ :

>>> # get support vectors >>> clf.support_vectors_ array([[0., 0.], [1., 1.]]) >>> # get indices of support vectors >>> clf.support_ array([0, 1]. ) >>> # get number of support vectors for each class >>> clf.n_support_ array([1, 1]. )

1.4.1.1. Multi-class classification¶

SVC and NuSVC implement the “one-versus-one” approach for multi-class classification. In total, n_classes * (n_classes — 1) / 2 classifiers are constructed and each one trains data from two classes. To provide a consistent interface with other classifiers, the decision_function_shape option allows to monotonically transform the results of the “one-versus-one” classifiers to a “one-vs-rest” decision function of shape (n_samples, n_classes) .

>>> X = [[0], [1], [2], [3]] >>> Y = [0, 1, 2, 3] >>> clf = svm.SVC(decision_function_shape='ovo') >>> clf.fit(X, Y) SVC(decision_function_shape='ovo') >>> dec = clf.decision_function([[1]]) >>> dec.shape[1] # 4 classes: 4*3/2 = 6 6 >>> clf.decision_function_shape = "ovr" >>> dec = clf.decision_function([[1]]) >>> dec.shape[1] # 4 classes 4

On the other hand, LinearSVC implements “one-vs-the-rest” multi-class strategy, thus training n_classes models.

>>> lin_clf = svm.LinearSVC(dual="auto") >>> lin_clf.fit(X, Y) LinearSVC(dual='auto') >>> dec = lin_clf.decision_function([[1]]) >>> dec.shape[1] 4

See Mathematical formulation for a complete description of the decision function.

Details on multi-class strategies Click for more details

Note that the LinearSVC also implements an alternative multi-class strategy, the so-called multi-class SVM formulated by Crammer and Singer [ 16 ] , by using the option multi_class=’crammer_singer’ . In practice, one-vs-rest classification is usually preferred, since the results are mostly similar, but the runtime is significantly less.

For “one-vs-rest” LinearSVC the attributes coef_ and intercept_ have the shape (n_classes, n_features) and (n_classes,) respectively. Each row of the coefficients corresponds to one of the n_classes “one-vs-rest” classifiers and similar for the intercepts, in the order of the “one” class.

In the case of “one-vs-one” SVC and NuSVC , the layout of the attributes is a little more involved. In the case of a linear kernel, the attributes coef_ and intercept_ have the shape (n_classes * (n_classes — 1) / 2, n_features) and (n_classes * (n_classes — 1) / 2) respectively. This is similar to the layout for LinearSVC described above, with each row now corresponding to a binary classifier. The order for classes 0 to n is “0 vs 1”, “0 vs 2” , … “0 vs n”, “1 vs 2”, “1 vs 3”, “1 vs n”, . . . “n-1 vs n”.

The shape of dual_coef_ is (n_classes-1, n_SV) with a somewhat hard to grasp layout. The columns correspond to the support vectors involved in any of the n_classes * (n_classes — 1) / 2 “one-vs-one” classifiers. Each support vector v has a dual coefficient in each of the n_classes — 1 classifiers comparing the class of v against another class. Note that some, but not all, of these dual coefficients, may be zero. The n_classes — 1 entries in each column are these dual coefficients, ordered by the opposing class.

This might be clearer with an example: consider a three class problem with class 0 having three support vectors \(v^_0, v^_0, v^_0\) and class 1 and 2 having two support vectors \(v^_1, v^_1\) and \(v^_2, v^_2\) respectively. For each support vector \(v^_i\) , there are two dual coefficients. Let’s call the coefficient of support vector \(v^_i\) in the classifier between classes \(i\) and \(k\) \(\alpha^_\) . Then dual_coef_ looks like this: