Selenium get HTML source in Python

Do you want to get the HTML source code of a webpage with Python selenium? In this article you will learn how to do that.

Selenium is a Python module for browser automation. You can use it to grab the code that web pages are made of: HyperText Markup Language (HTML).

What is HTML source? It is the markup code that a browser uses to construct a web page.

To get it, you first need to have selenium and the web driver installed. You can then let Python start the web browser, open the web page URL and grab the HTML source.


Install Selenium

To start, install the selenium module for Python.

For Windows users, do this instead:

It’s recommended that you do that in a virtual environment using virtualenv.

If you use the PyCharm IDE, you can install the module from inside the IDE.

Make sure you have the web driver installed, or it will not work.
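Before running the example, it can help to confirm that both prerequisites are in place. The snippet below is a minimal sketch that uses only the standard library. The function name `check_setup` is made up for illustration, and `geckodriver` is assumed as the driver name because the example in this article uses Firefox. Recent Selenium releases may be able to fetch a matching driver on their own, so a missing driver on the PATH is not necessarily an error.

```python
import importlib.util
import shutil


def check_setup(module_name="selenium", driver_name="geckodriver"):
    """Report whether the Python module and the driver executable can be found.

    Both default names are assumptions for this article's Firefox example.
    """
    return {
        # find_spec returns None when the top-level module is not installed
        "module_installed": importlib.util.find_spec(module_name) is not None,
        # shutil.which returns the full path, or None if it is not on the PATH
        "driver_path": shutil.which(driver_name),
    }
```

Calling `check_setup()` returns a small dictionary, for example with `module_installed` set to `False` if the module still needs to be installed (typically with `pip install selenium`).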

Selenium get HTML

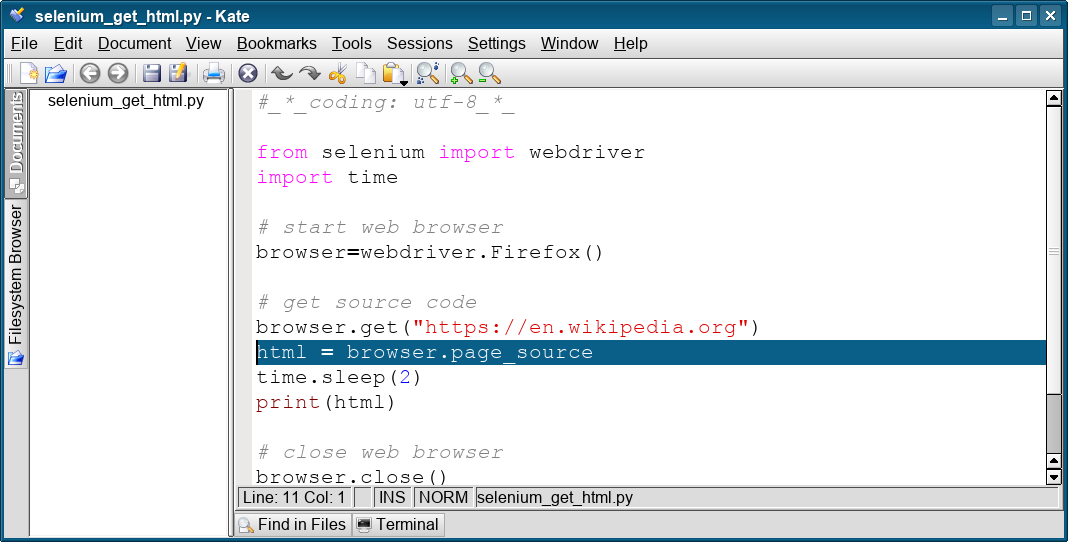

You can retrieve the HTML source of a URL with the code shown below.

The code starts the Firefox web browser, opens a web page with the get() method, stores the page's HTML from browser.page_source and then prints it.


    # -*- coding: utf-8 -*-
    from selenium import webdriver
    import time

    # start web browser
    browser = webdriver.Firefox()

    # get source code
    browser.get("https://en.wikipedia.org")
    html = browser.page_source
    time.sleep(2)
    print(html)

    # close web browser
    browser.close()

This is done in a few steps. First, import webdriver from selenium and the time module.

    from selenium import webdriver
    import time

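The web browser is started with a single line of code. In this example we use Firefox, but any of the supported browsers will do (for example Chrome or Edge). PhantomJS, which older tutorials often list, is no longer supported by current Selenium releases.

    # start web browser
    browser = webdriver.Firefox()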
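If you want to switch browsers without editing the script, one option is to look up the webdriver class by name. This is a minimal sketch: `SUPPORTED`, `driver_class_name` and `start_browser` are hypothetical names introduced here, and the list of browsers is an assumption about what you have installed. Each browser still needs its own driver.

```python
# Map lowercase browser names to class names in selenium.webdriver.
SUPPORTED = {
    "firefox": "Firefox",
    "chrome": "Chrome",
    "edge": "Edge",
    "safari": "Safari",
}


def driver_class_name(browser_name):
    """Translate a user-supplied browser name into a webdriver class name."""
    key = browser_name.strip().lower()
    if key not in SUPPORTED:
        raise ValueError(f"unsupported browser: {browser_name!r}")
    return SUPPORTED[key]


def start_browser(browser_name="firefox"):
    """Start the requested browser; needs selenium and the matching driver."""
    # Imported here so the name lookup above works without selenium installed.
    from selenium import webdriver

    return getattr(webdriver, driver_class_name(browser_name))()
```

With this in place, `start_browser("chrome")` would take the place of `webdriver.Firefox()` in the example.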

Next, the URL you want is opened with the get() method, which loads the page in the browser.

    # get source code
    browser.get("https://en.wikipedia.org")

Then you can use the .page_source attribute to get the HTML code.

    html = browser.page_source
    time.sleep(2)
    print(html)
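A fixed `time.sleep(2)` is a blunt way to wait, and pages that build their content with JavaScript may need more or less time. The sketch below polls `page_source` until two consecutive snapshots are identical. This is a heuristic, not a Selenium feature: the function name `wait_for_stable_source` and the "unchanged means finished" rule are assumptions made for illustration. For waiting on a specific condition, Selenium provides `WebDriverWait` in `selenium.webdriver.support.ui`.

```python
import time


def wait_for_stable_source(browser, timeout=10.0, poll=0.5):
    """Return page_source once it stops changing, or the last snapshot on timeout.

    `browser` is any object with a page_source attribute, such as a
    Selenium WebDriver instance.
    """
    deadline = time.monotonic() + timeout
    previous = browser.page_source
    while time.monotonic() < deadline:
        time.sleep(poll)
        current = browser.page_source
        if current == previous:
            return current  # two identical snapshots in a row
        previous = current
    return previous  # timed out; return the most recent snapshot
```

In the example above, `html = wait_for_stable_source(browser)` could replace the separate `page_source` and `time.sleep(2)` lines.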

You can then optionally output the HTML source (or do something else with it).
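As one example of doing something else with the HTML, the sketch below pulls the page title out of the source string using Python's built-in `html.parser` module. The names `TitleParser` and `extract_title` are introduced here for illustration. For heavier parsing you would probably reach for a dedicated library.

```python
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collect the text inside the first <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = None

    def handle_starttag(self, tag, attrs):
        # Only the first <title> is recorded.
        if tag == "title" and self.title is None:
            self._in_title = True
            self.title = ""

    def handle_data(self, data):
        if self._in_title:
            self.title += data

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False


def extract_title(html):
    """Return the stripped page title, or None if the HTML has no <title>."""
    parser = TitleParser()
    parser.feed(html)
    return parser.title.strip() if parser.title is not None else None
```

You would call it as `extract_title(html)` with the string obtained from `browser.page_source`.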

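Don’t forget to close the web browser when you are done. The close() method closes the current window; to end the whole session and stop the driver process, call browser.quit() instead.

    # close web browser
    browser.close()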
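The steps above can be wrapped in one function so that the browser is shut down even if loading the page fails. This is a minimal sketch, not a definitive implementation: `fetch_page_source` and its `make_browser` parameter are hypothetical names, and Firefox is assumed as the default because the article uses it. The sketch calls `quit()`, which ends the whole browser session, in place of `close()`.

```python
def fetch_page_source(url, make_browser=None):
    """Open url in a browser, return its HTML, and always shut the browser down.

    make_browser is any zero-argument callable returning a driver-like object;
    it defaults to Selenium's Firefox driver.
    """
    if make_browser is None:
        # Imported lazily: selenium is only needed when a real browser is used.
        from selenium import webdriver

        make_browser = webdriver.Firefox

    browser = make_browser()
    try:
        browser.get(url)
        return browser.page_source
    finally:
        browser.quit()  # runs even if get() raises an exception
```

A call such as `html = fetch_page_source("https://en.wikipedia.org")` then does the same work as the full script. Passing the browser factory as a parameter also makes it easy to try another browser or to test the function with a stand-in object.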

